Ready to build the right product faster? This guide takes a practical path through planning, MVP development, and early release so your team learns from real users quickly.

A minimum viable product (MVP) is the smallest release that yields the maximum amount of validated learning. Zappos famously proved demand by fulfilling early orders manually before investing in automation, and CB Insights' analyses of startup failures list lack of market need among the top reasons startups fail.

We cover core phases: clarify the problem and value, study the market, define an early audience, map user flows, and pick the features that matter most.

Execution matters. Assemble the right team, choose fitting technology, set a realistic budget, and adopt an iterative development process. Keep usability, performance, and security in your quality bar even when you move fast.

Key Takeaways

- Focus on validated learning, not perfection.

- Prioritize core features that deliver clear value.

- Use lean testing and real user feedback early.

- Choose launch style based on risk and reach.

- Measure engagement, retention, and conversion metrics.

- Iterate fast with a prioritized backlog.

- Ground every decision in real examples and data.

What Is a Minimum Viable Product and Why It Matters Today

An MVP is a launchable product version designed to test the riskiest assumptions with minimal waste. It focuses on the smallest set of capabilities that deliver clear value and generate real usage signals.

Minimum doesn’t mean sloppy. A minimum viable product must be reliable and usable enough to attract early adopters. That way you collect credible feedback and learn fast.

Why it matters now: fast learning cuts development costs and shortens time to market. Teams validate demand before building costly features. CB Insights shows lack of market need often kills startups, so early validation protects the business.

- Test assumptions with real users.

- Trim waste and focus on core functionality.

- Turn signals into roadmap decisions.

| Aspect | MVP Goal | Benefit |
|---|---|---|
| Core features | Deliver core value | Faster product-market fit |
| Usability | Be reliable for early adopters | Credible feedback |
| Cost | Minimize upfront spend | Lower business risk |

Remember the Zappos example: manual work proved demand before automation. Treat the MVP as a repeatable process — ship, measure, learn — and use those lessons in future development.

PoC, Prototype, and MVP: Choosing the Right Starting Point

Choose the right early artifact depending on whether your greatest risk is technical, usability, or market demand.

When technical feasibility needs proving

Proof of Concept (PoC) verifies that a core technology or integration can work. Use a PoC when the underlying system or third-party API could fail. PoCs stay internal and help decide if investment is justified.

When design and flows must be refined

A prototype is a fast, clickable model for testing interactions and clarity. Use prototypes for user testing, stakeholder demos, and investor conversations. They sharpen design and reveal UX gaps before heavy development.

When a releasable version is needed

A minimum viable product is a releasable version that focuses on must-have value and real-world testing. An MVP gauges market demand, willingness to pay, and core feature fit with early users.

- Sequence rule: PoC for tech risk, prototype for experience, MVP for market validation.

- Mix approaches when useful: a concierge or manual backend can stand in during testing.

- Document learning goals at each stage so the product roadmap stays focused on validated insight.

How to Launch an MVP Step by Step

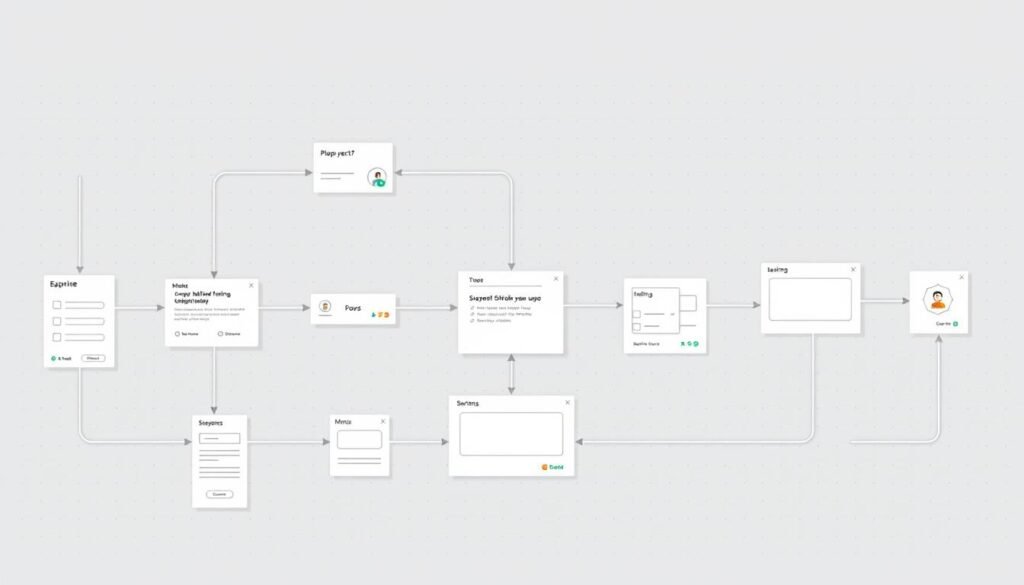

A practical roadmap begins by turning a vague idea into measurable learning goals. Start with a clear problem and the single value you must prove. Define the audience and capture core user flows that show the simplest path to that value.

From defining the problem to iterating after launch

Plan the build: separate must-haves from nice-to-haves and set team, tech, timeline, and budget guardrails. This reduces rework and keeps the product lean.

Develop iteratively with continuous QA and frequent demos. Choose a launch approach (soft, hard, or silent) that matches your risk tolerance and desired reach. Instrument analytics and feedback channels before release so you gather usable signals from day one.

- Prioritize features by impact and effort.

- Measure engagement, retention, conversion, and customer acquisition cost (CAC).

- Feed insights into a living backlog and release quickly.

| Stage | Goal | Key metric |
|---|---|---|
| Define | Problem & value | Conversion intent |
| Build | Core product features | Activation rate |
| Iterate | Improve fit | Retention |

Shipping a viable product is the start of a learning process. Keep cycles short: deploy, observe, talk with users, and refine for success.

Define the Problem and Value Proposition

Pinpoint the main pain your idea aims to remove, then decide if that pain is already felt or must be created.

Existing problems vs manufactured problems

Distinguish real, existing problems from manufactured ones. Existing problems are familiar pains users accept as costly or annoying. For example, Flo answered a clear information gap in women’s health. Manufactured problems require new behaviors and education. Telemedicine adoption rose sharply during COVID‑19, showing behavior can change when benefits are obvious.

Validate intensity and frequency with conversations, surveys, and simple observation. Ask how often the problem occurs and what users now do to cope.

When the problem is manufactured, plan higher onboarding and marketing effort. Ensure the benefit outweighs the cost of behavior change.

Crafting a compelling value proposition for your target audience

Frame your value as a crisp promise: who you serve, the outcome you deliver, and why you’re better than alternatives.

- Who: define the target audience and typical scenario.

- What: state the core value in one line (time saved, errors cut, revenue gained).

- Why: explain the unique advantage or tradeoff.

Keep the MVP scope focused on delivering that promise, not a feature catalog. Document assumptions and measurable outcomes so later rounds of MVP development can validate or discard them.

| Focus | Question | Example |
|---|---|---|
| Problem type | Existing or manufactured? | Flo: existing; telemedicine: manufactured, with adoption accelerated by COVID‑19 |
| Validation method | How to prove it hurts enough | Interviews, surveys, observation |
| Value metric | How success is measured | Time saved, errors reduced, revenue impact |

Analyze the Market and Competitors

Studying rivals reveals unmet demand and clear opportunities for a focused, high-value offering.

Size up the landscape. Research who serves your audience today, their pricing, and their on-ramps. Look for differences in positioning and customer promises.

Finding your place: cost leadership, differentiation, and focus

Choose a strategy lane that matches team strengths: cost leadership, differentiation, or a focused play. Each approach shapes product scope, marketing, and go-to-market choices.

Use competitor benchmarks as minimum acceptable performance targets. That helps set usability and design thresholds for a viable product.

Spotting gaps competitors overlook

Scan reviews, support forums, and social channels for recurring complaints. Those pain points are high-value openings your MVP can address quickly.

“Many product launches fail because segmentation was too broad. Narrow the initial audience and win a niche.”

- Map competitors’ onboarding and friction points; remove those for faster activation.

- Consider geographic or industry nuances that affect adoption and compliance.

- Validate segment size and accessibility before committing build resources.

| Focus | Question | Action |
|---|---|---|
| Positioning | Who is served? | Define a narrow target audience and message |
| Gaps | What is broken? | Prioritize fixes that deliver clear value |
| Benchmarks | Performance goals | Set measurable thresholds from competitors |

Translate market data into crisp positioning and a focused feature set. That increases the odds of product success and gives customers a reason to switch.

Identify Your Target Audience and Early Adopters

Start by naming the specific people who feel the pain your product solves and map what success looks like for them.

Buyer personas and early evangelists

Create detailed buyer personas that go beyond age and job title. Include motivations, jobs‑to‑be‑done, and clear success criteria.

Find early adopters who feel the problem acutely. They will try new solutions and give the candid feedback you need for MVP development.

- Build personas with pain points, triggers, and common objections.

- Design a landing page that explains your value and captures emails or phone numbers for early access.

- Seed awareness in niche forums, founder communities, and platforms like Product Hunt to recruit motivated testers.

- Offer lightweight onboarding or concierge support for the first customers to increase learning speed.

- Track sign-up sources and run targeted messages tailored to each persona’s language and objections.
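Tracking sign-up sources can be as simple as tagging each outbound link to the landing page with a campaign parameter and counting where sign-ups arrive from. The sketch below assumes links carry a `utm_source` query parameter (a common campaign-tagging convention you add yourself, not something a landing page provides automatically); the URLs and source names are made up for illustration.

```python
from collections import Counter
from urllib.parse import parse_qs, urlparse


def signup_source(landing_url: str) -> str:
    """Return the utm_source query parameter, or 'direct' if the link is untagged."""
    params = parse_qs(urlparse(landing_url).query)
    return params.get("utm_source", ["direct"])[0]


def signups_by_source(landing_urls: list[str]) -> Counter:
    """Count sign-ups per acquisition source."""
    return Counter(signup_source(url) for url in landing_urls)


# Hypothetical landing URLs captured alongside each early-access sign-up.
signups = [
    "https://example.com/early-access?utm_source=producthunt",
    "https://example.com/early-access?utm_source=forum",
    "https://example.com/early-access?utm_source=producthunt",
    "https://example.com/early-access",
]
print(signups_by_source(signups).most_common())
```

Counting per source this way makes it easy to compare personas' response to each channel's messaging before you spend more on any one of them.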

Be transparent with early evangelists about product plans and show how their input shapes the roadmap. Use their insights to de-risk assumptions before you scale the build.

| Action | Goal | Metric |
|---|---|---|
| Persona research | Match product to real needs | Conversion rate |
| Landing page | Collect high-quality leads | Email/phone sign-ups |
| Community seeding | Find motivated users | Referral & activation |

Map the User Flow for a Better User Experience

Trace each path your audience takes when they try to reach an outcome, and document every fork. This reveals the critical paths that must work first for a minimum viable product.

Focus on core tasks that deliver clear value: sign-up, first use, and task completion. Use low- and mid-fidelity prototypes to validate comprehension before heavy development.

Annotate analytics points where you will measure drop-offs, confusion, and success. Align each flow with personas so language and steps match audience expectations.

Plan for edge cases: errors, retries, and empty states. Tie every flow step to must-have features so scope stays tight and functionality meets needs.

- Trim steps and clarify copy to reduce friction.

- Ensure accessibility and mobile-first design.

- Define success criteria like task time and completion rate.

| Flow Stage | Goal | Metric |
|---|---|---|
| Discover | Find product value | Click-through rate |
| Onboard | Activate first task | Time to first success |
| Complete | Finish main task | Task success rate |

Prioritize Core Features for MVP Development

A tight feature set protects speed and surfaces the signals you need for real decisions. Start by listing every idea and then sort them using a simple prioritization matrix: impact on value, dependency in the user flow, and implementation effort.

Define core as what enables the primary user outcome and the learning goals you must test. Keep conveniences off the initial roadmap unless they unlock essential functionality.

Feature prioritization matrices and backlogs

Create a living backlog and tag items as Must, Should, Could, and Not Now. Use rapid validation—clickable prototypes or concept tests—with early adopters for top Must items.

- Rank by impact on the value proposition and required data collection.

- Consolidate variants (for example, one simple login) rather than building many options.

- Assign a hypothesis and a metric to every selected feature.
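One way to make the prioritization matrix concrete is to score each idea on the three axes and derive its tag from the scores. This is a minimal sketch: the `Feature` fields, the 1-5 scales, and the cut-off values in `tag` are hypothetical choices for illustration, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    impact: int              # 1-5: contribution to the core value proposition
    effort: int              # 1-5: estimated implementation effort
    blocks_core_flow: bool   # True if the primary user flow cannot work without it


def tag(feature: Feature) -> str:
    """Assign Must/Should/Could/Not Now using illustrative thresholds."""
    if feature.blocks_core_flow:
        return "Must"      # dependency in the user flow trumps everything else
    if feature.impact >= 4 and feature.effort <= 3:
        return "Should"    # high value, cheap enough to consider early
    if feature.impact >= 3:
        return "Could"
    return "Not Now"


backlog = [
    Feature("Core workflow", impact=5, effort=4, blocks_core_flow=True),
    Feature("Event tracking", impact=4, effort=2, blocks_core_flow=True),
    Feature("Onboarding tips", impact=4, effort=2, blocks_core_flow=False),
    Feature("Dark theme", impact=2, effort=2, blocks_core_flow=False),
]

# Highest impact first; among equal impact, lowest effort first.
for f in sorted(backlog, key=lambda f: (-f.impact, f.effort)):
    print(f"{f.name}: {tag(f)}")
```

Treating "blocks the core flow" as an override keeps instrumentation such as event tracking in the Must bucket even when it adds no visible user value.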

Avoiding bloat while protecting essential analytics

Include instrumentation from day one. Track exits, funnels, and key events so testing yields meaningful data.
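Funnel tracking boils down to counting how many users reach each ordered step and comparing adjacent steps. The sketch below assumes you can already export per-step user counts from your analytics tool; the step names and numbers are invented.

```python
def funnel_report(step_counts: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Return step-to-step conversion for an ordered funnel of (step, users) pairs."""
    report = []
    for (prev_step, prev_users), (step, users) in zip(step_counts, step_counts[1:]):
        # Guard against an empty earlier step to avoid dividing by zero.
        rate = users / prev_users if prev_users else 0.0
        report.append((f"{prev_step} -> {step}", rate))
    return report


# Hypothetical weekly counts of users reaching each step.
funnel = [("visited", 1000), ("signed_up", 300), ("first_task", 120), ("completed", 90)]
for transition, rate in funnel_report(funnel):
    print(f"{transition}: {rate:.0%}")
```

With these sample numbers only 30% of visitors sign up, the weakest transition, so the landing page and sign-up flow would be the first place to look.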

| Category | Goal | Example |
|---|---|---|
| Must | Enable primary user outcome | Core workflow, event tracking |
| Should | Improve activation or retention | Simplified onboarding, tips |
| Could | Nice-to-have enhancements | Extra integrations, themes |
| Not Now | Defer until validated | Advanced analytics, variants |

Avoid gold-plating. Favor clear, consistent design over novelty. Reassess priorities after real usage and feedback, and let data drive further development.

Plan Your Build: Team, Budget, and Technology Choices

Planning the build sets the boundaries that keep a minimum viable product focused and fast.

Agile vs Waterfall

Pick a delivery framework that supports learning and change. Agile favors short cycles, frequent releases, and early user feedback, so teams can adapt before making large investments. Use it when speed, testing, and market feedback matter most: deliver small increments, run demos, and adjust priorities every sprint.

Reserve Waterfall for cases where compliance requirements or fixed contracts force a linear process. It can work for regulated builds, but at the MVP stage it slows iteration and increases risk.

Native, Cross‑Platform, and Reuse

Choose native when performance or platform features drive value. Consider cross‑platform for broad reach with less time and cost.

- Reuse components and proven UI libraries to cut effort and keep design consistent.

- Integrate third‑party APIs for payments, auth, or analytics instead of building from scratch.

- Align design and engineering on a design system early to speed work and reduce rework.

Define the team clearly: product, design, engineering, QA, and a delivery lead. Tie milestones to learning goals rather than feature counts. Set up CI/CD, security checks, and branching strategies so deployments are fast and safe.

| Decision | Why it matters | Example action |
|---|---|---|
| Framework | Controls pace of development | Choose Agile sprints with demo cadence |
| Tech stack | Affects performance, time, cost | Pick cross‑platform for wide device coverage |
| Budget | Limits scope and risk | Set milestones tied to testing and user learning |

Document assumptions and risks and review them in planning meetings. Regular checkpoints keep stakeholders aligned and let the business react when data suggests a new direction.

Build, Test, and Assure Quality Before Release

Make testing a continuous part of work so each version moves closer to reliable value. That mindset keeps the product useful for real users and protects learning from bad signals.

Usability, performance, and security testing essentials

Start with clear acceptance criteria for every feature and key user flow. That makes quality measurable and removes ambiguity during development.

Use a mix of manual exploratory tests and automated checks for regressions. Run usability sessions to confirm users complete core tasks and read the interface copy as intended.

Validate performance thresholds for load times and responsiveness. Slow screens can drive early users away even when the features they need are present.

Assess security basics: authentication, authorization, data storage, and third‑party risks. Test across devices, browsers, and network conditions.

Defining clear success criteria and test cases

Create a concise test plan that maps goals to test cases and expected results. Include edge condition checks for empty states, failed payments, and timeouts.

Instrument logs and monitoring so issues surface quickly after deployment. Run QA in parallel with development and hold regular bug triage meetings.

“A minimal but broken release undermines learning and trust.”

- Acceptance criteria: measurable pass/fail for each flow.

- Test strategy: balance manual, automated, and performance suites.

- Post-release: monitoring, error alerts, and fast rollback plans.
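Acceptance criteria are easiest to enforce when they are written as pass/fail checks a release must clear. This sketch assumes two hypothetical criteria (an 80% task success rate across test sessions and a 2,000 ms 95th-percentile page load) and uses the nearest-rank percentile method; substitute the thresholds and measurements your team actually agrees on.

```python
import math

# Hypothetical release criteria; derive real values from competitor benchmarks.
CRITERIA = {
    "task_success_rate_min": 0.80,
    "p95_load_ms_max": 2000,
}


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample covering pct% of the data."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_release(task_results: list[bool], load_times_ms: list[float]) -> dict[str, bool]:
    """Evaluate each acceptance criterion as an explicit pass/fail result."""
    success_rate = sum(task_results) / len(task_results)
    p95_load = percentile(load_times_ms, 95)
    return {
        "task_success": success_rate >= CRITERIA["task_success_rate_min"],
        "p95_load": p95_load <= CRITERIA["p95_load_ms_max"],
    }


results = check_release(
    task_results=[True] * 17 + [False] * 3,     # 17 of 20 test sessions succeeded
    load_times_ms=[900] * 18 + [1800, 2600],    # illustrative page-load samples
)
print(results)
print("release gate passed" if all(results.values()) else "release gate failed")
```

Using a high percentile rather than the average keeps a few very slow loads from hiding behind many fast ones.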

| Area | Focus | Key check |
|---|---|---|
| Usability | Task completion and clarity | Time to first success & task success rate |
| Performance | Responsiveness under load | Page load and response times against target thresholds |
| Security | Data protection basics | Auth flows, encrypted storage, dependency scans |
| Edge cases | Graceful failure handling | Empty states, retries, payment errors |

Treat testing as essential for a minimum viable product and for healthy MVP development. Well-run testing protects the core functionality, design, and experience that let the product gather useful feedback and valid data.

Choose Your Launch Approach: Soft, Hard, or Silent

Picking the right release strategy shapes how quickly you learn from real customers. The choice affects risk, marketing effort, and the clarity of feedback you collect.

Soft release for high-signal feedback

Soft releases limit access to a small segment so the team can gather detailed feedback and fix issues before scaling. This is ideal for early MVP development and refining core features.

Hard release for reach and momentum

Use a hard release when the product is stable, the audience is clear, and marketing resources will drive immediate traction. It creates broad awareness and tests market demand fast.

Silent release to de-risk production

A silent release rolls out quietly to validate stability, regional settings, or configuration without drawing public attention. It reduces risk while teams watch adoption and error rates closely.

- Match approach to goals, budget, and risk tolerance.

- Prepare surveys, in-app prompts, and interviews for feedback collection.

- Set entry and exit criteria and monitor adoption, errors, and engagement from day one.
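A soft release is often implemented as a percentage rollout: each user is hashed into a stable bucket, and only buckets below the current percentage see the new version. The sketch below is one such approach, assuming you control user IDs server-side; the feature name, the rollout ladder, and the 1% error-rate exit criterion are hypothetical.

```python
import hashlib

# Hypothetical rollout ladder (percent of users); tune to your own risk tolerance.
ROLLOUT_STEPS = [1, 5, 25, 100]


def rollout_bucket(user_id: str, feature: str) -> int:
    """Deterministically map a user to a bucket 0-99 for one feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def in_release(user_id: str, feature: str, rollout_percent: int) -> bool:
    """A user sees the release if their bucket falls below the rollout percentage."""
    return rollout_bucket(user_id, feature) < rollout_percent


def next_rollout(current: int, error_rate: float, max_error_rate: float = 0.01) -> int:
    """Advance one step on the ladder, or return 0 (roll back) if the exit criterion is breached."""
    if error_rate > max_error_rate:
        return 0
    larger = [step for step in ROLLOUT_STEPS if step > current]
    return larger[0] if larger else current


users = [f"user-{i}" for i in range(10_000)]
exposed = sum(in_release(u, "new-onboarding", 5) for u in users)
print(f"{exposed} of {len(users)} users see the soft release")  # roughly 5%
print(next_rollout(5, error_rate=0.002))  # widen to 25
print(next_rollout(5, error_rate=0.04))   # roll back to 0
```

Hashing the feature name together with the user ID keeps each user's assignment stable across sessions while giving every feature an independent sample, and raising the percentage only adds users, so nobody who already has access loses it.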

Early Marketing and Communication Plan

Start your marketing early so the first conversations shape product messaging, not the other way around. Early outreach gathers real objections and highlights the value people care about. That feedback should steer MVP development and future product choices.

Landing pages, Product Hunt, and social channels

Build a focused landing page that explains your value proposition clearly and captures sign-ups with a strong call to action.

- Landing page: concise headline, clear benefits, and a sign-up form for early access.

- Product Hunt / communities: plan timing, hunter outreach, and follow-up to reach active adopters.

- Social: prioritize channels where your target audience already engages and aim for quality conversations.

Collecting sign-ups and nurturing early customers

Set up nurture flows—welcome emails, feature updates, and brief surveys—to keep early customers informed and involved.

Share a transparent roadmap snapshot so people feel part of the journey. Encourage testimonials and user content to validate messaging and boost trust.

- Align marketing promises with actual MVP capabilities to avoid mismatched expectations.

- Iterate messaging based on real questions and objections you hear from prospects and users.

- Track channel performance and double down on sources that drive qualified interest and meaningful feedback.

| Action | Goal | Metric |
|---|---|---|
| Landing page | Collect interest | Sign-ups per week |
| Community launch | Find early adopters | Activation rate |
| Nurture flow | Retain interest | Email open/click rate |

Measure What Matters: Metrics and Feedback Loops

Good measurement separates opinion from insight and points the product team toward real gains. For a minimum viable product, combine quick numbers with direct user stories so decisions rest on both what people do and why they act.

Qualitative vs quantitative data and triangulation

Quantitative metrics show frequency and scale: traffic, activation rates, task completion, and error counts. They tell you where users stumble.

Qualitative feedback reveals motive: interviews, usability sessions, and open comments explain why users behave a certain way.

Practice triangulation by seeking agreement across interviews, tests, and analytics before changing the product. Convergence increases confidence in decisions.

Key KPIs: engagement, retention, conversions, CAC

Pick metrics tied to your learning goals: activation, engagement, retention, conversion, and unit economics like CAC. Track leading indicators—time to value and task success—alongside outcome metrics such as paid conversion.

- Instrument funnels and run cohort analysis to find drop-off points.

- Use structured usability sessions to surface friction that the numbers hide.

- Calculate CAC by channel: spend divided by customers acquired.
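The CAC formula is plain division, but computing it per channel side by side shows where acquisition money works hardest. The channel names and figures below are illustrative only, not real benchmarks.

```python
import math


def cac_by_channel(spend: dict[str, float], customers: dict[str, int]) -> dict[str, float]:
    """Customer acquisition cost per channel: spend divided by customers acquired."""
    result = {}
    for channel, amount in spend.items():
        acquired = customers.get(channel, 0)
        # No customers acquired: CAC is undefined, reported here as infinity.
        result[channel] = amount / acquired if acquired else math.inf
    return result


# Illustrative monthly figures, not real data.
spend = {"search_ads": 1200.0, "communities": 150.0, "newsletter_sponsorship": 300.0}
customers = {"search_ads": 24, "communities": 15, "newsletter_sponsorship": 0}

# Cheapest channel first.
for channel, cac in sorted(cac_by_channel(spend, customers).items(), key=lambda kv: kv[1]):
    print(f"{channel}: {cac:.2f}")
```

Reporting a zero-customer channel as infinite rather than skipping it keeps spend that produced nothing visible in the review.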

| Metric | What it shows | Action trigger |
|---|---|---|
| Activation rate | Early user success | Improve onboarding copy or remove friction |
| Retention (7/30 day) | Product value over time | Prioritize features that increase repeat use |
| Conversion rate | Value monetization | Test pricing, messaging, or checkout flow |
| CAC by channel | Acquisition efficiency | Shift spend to high‑ROI channels |

Review metrics regularly, set thresholds for action, and tie every change to a clear hypothesis. Measure to learn, not to defend assumptions, and adapt metrics as the product and market evolve.
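For 7- and 30-day retention, one common definition counts a user as retained on day N if they were active exactly N days after signing up (some teams use "on or after day N" instead). Here is a minimal sketch under that assumption, with made-up users and dates, that also groups users into cohorts by sign-up date for cohort analysis.

```python
from collections import defaultdict
from datetime import date, timedelta


def day_n_retention(signups: dict[str, date], activity: dict[str, set[date]], n: int) -> float:
    """Share of users active exactly n days after their sign-up date."""
    if not signups:
        return 0.0
    retained = sum(
        1 for user, signed_up in signups.items()
        if signed_up + timedelta(days=n) in activity.get(user, set())
    )
    return retained / len(signups)


def retention_by_cohort(signups: dict[str, date], activity: dict[str, set[date]], n: int) -> dict[date, float]:
    """Group users by sign-up date and compute day-n retention for each cohort."""
    cohorts: dict[date, dict[str, date]] = defaultdict(dict)
    for user, signed_up in signups.items():
        cohorts[signed_up][user] = signed_up
    return {cohort: day_n_retention(members, activity, n) for cohort, members in sorted(cohorts.items())}


# Illustrative data: four users in two daily sign-up cohorts.
signups = {"a": date(2024, 3, 1), "b": date(2024, 3, 1), "c": date(2024, 3, 2), "d": date(2024, 3, 2)}
activity = {
    "a": {date(2024, 3, 8), date(2024, 3, 31)},
    "b": {date(2024, 3, 8)},
    "c": {date(2024, 3, 9)},
    "d": set(),
}
print(day_n_retention(signups, activity, 7))   # 0.75
print(day_n_retention(signups, activity, 30))  # 0.25
print(retention_by_cohort(signups, activity, 7))
```

In practice you would usually group cohorts by sign-up week rather than by day; the grouping key is the only part that changes.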

Iterate Fast: Turning User Feedback into Product Development

Turn raw user reactions into a steady rhythm of product improvements that move metrics and keep your audience engaged. After release, collect feedback, analytics, and team bets in one place so nothing slips through the cracks.

Organizing the backlog for rapid improvements

Establish a single, prioritized backlog that combines user feedback, analytics findings, and strategic ideas. Score each item by impact, confidence, and effort so the highest-leverage changes ship first.

- Convert feedback into hypotheses. Write a crisp problem statement, propose a small test, and define success metrics.

- Run short sprints. Keep demo and retro cadences tight so the team learns in days, not months.

- Protect the core experience. Fix critical friction before adding new features or capabilities.

- Pair design and engineering early. Joint solutions cut rework and preserve a consistent user experience.

- Keep technical debt visible. Schedule refactors so velocity and functionality stay healthy.
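Scoring backlog items by impact, confidence, and effort can be sketched as impact * confidence / effort, a variant of the ICE score (classic ICE multiplies by ease rather than dividing by effort). The field names, scales, and sample items below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    title: str
    source: str        # "feedback", "analytics", or "strategy"
    impact: int        # 1-10: expected effect on the target metric
    confidence: float  # 0-1: strength of supporting evidence
    effort: int        # 1-10: relative cost to ship (must be > 0)

    @property
    def score(self) -> float:
        # Higher impact and confidence raise the score; higher effort lowers it.
        return self.impact * self.confidence / self.effort


backlog = [
    BacklogItem("Fix confusing sign-up error", "feedback", impact=8, confidence=0.9, effort=2),
    BacklogItem("Add CSV export", "strategy", impact=5, confidence=0.4, effort=5),
    BacklogItem("Shorten onboarding to 3 steps", "analytics", impact=7, confidence=0.7, effort=4),
]

# One prioritized list regardless of where each idea came from.
for item in sorted(backlog, key=lambda i: i.score, reverse=True):
    print(f"{item.score:5.2f}  {item.title}  ({item.source})")
```

Keeping the `source` field on every item lets you check later whether feedback-driven or analytics-driven changes moved your metrics more.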

Close the feedback loop: tell users what changed and why. Continuously validate that each improvement moves the metrics you care about, and roll back changes that don’t. Treat iteration as your business advantage—fast learning beats fast building when the goal is a viable product that serves real needs.

Conclusion

Deliver a usable core that shows real value and invites honest user feedback. A strong minimum viable product balances minimal scope with reliable design so teams gather valid signals fast.

Follow the process: define the problem and audience, research the market, map flows, pick core features, plan budget and team, build, test, and measure. Choose a launch approach that fits risk and instrument analytics before users arrive.

Protect essential metrics—engagement, retention, conversion, CAC—and act quickly on insights. Fast, disciplined iteration on small changes compounds into real product success. Assemble your team, scope the core, and start learning with real customers this quarter.